AI’s Growth Paradox: Why Data Centers Matter More Than Ever

By Virta Ventures on March 11, 2025

At Virta, we’ve been tracking the need for data center development for a while now, writing about and investing in software plays that support data infrastructure development and operations. So we were excited when the Stargate Project was announced, signaling substantial American investment in this space. The near-simultaneous release of DeepSeek-R1, however, was a shocking revelation that had us questioning our assumptions about the resource intensity of foundation model building. One question kept coming up: if AI models can be trained for a fraction of the energy and compute cost, is there still an urgent need to build out AI data processing infrastructure?

We think so. Plummeting foundation model development costs democratize AI capabilities and open the door to verticalized AI models addressing specialized use cases across industries.

Less resource-intensive models herald the training of more models.

DeepSeek made headlines for challenging the conventional wisdom that training a foundation model requires prohibitive amounts of capital and resources. But DeepSeek isn’t unique in possessing this “secret sauce” of resource-efficient AI model development. According to a research paper released in the past few weeks, AI researchers at Stanford and the University of Washington trained an AI “reasoning” model similar in capability to OpenAI’s o1 or DeepSeek’s R1 for under $50 in compute credits, by fine-tuning an existing open-weight model.

Building specialized foundation models for vertical industries previously seemed infeasible because of their perceived resource intensity, but recent low-cost training results call that assumption into question. At the current stage of AI maturity, it appears possible to train specialized reasoning models for specific use cases at low compute cost.

This makes DeepSeek and other resource-efficient models a tailwind for data center energy demand, not a headwind. We expect more specialized models addressing reasoning problems across a diverse set of use cases to emerge in the near future, ultimately increasing net demand for compute and energy.

We’re seeing the tech industry start to align with this thinking. Satya Nadella recently invoked the Jevons Paradox for exactly this reason. Just as more efficient coal use in the 19th century increased, rather than decreased, total demand for coal, we expect demand for AI to rise as the cost of training and operating it falls.
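The Jevons argument can be made concrete with a short textbook derivation. This is a sketch under simplifying assumptions of our own, not a forecast: demand Q for AI work has a constant price elasticity ε (with A a scale constant), and an efficiency gain of factor k > 1 cuts both the compute c needed per unit of AI work and its price p by that same factor.

```latex
\begin{aligned}
Q(p) &= A\,p^{-\varepsilon} \\
Q' = Q(p/k) &= A\,(p/k)^{-\varepsilon} = k^{\varepsilon}\,Q \\
C = Q\,c, \qquad C' = Q' \cdot \frac{c}{k} &= k^{\varepsilon}\,Q\,\frac{c}{k} = k^{\varepsilon - 1}\,C
\end{aligned}
```

Total compute demand C therefore rises after an efficiency gain whenever demand is elastic (ε > 1), stays flat at ε = 1, and falls only if ε < 1. With purely illustrative values of k = 10 and ε = 1.5, total compute grows by 10^0.5, or roughly 3.2 times.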

More model development means more data center infrastructure demand.

An initial reaction to DeepSeek may have been that infrastructure bets like the Stargate Project are made obsolete by model resource efficiency. But the rapid increase in AI model development and usage described above justifies the need for more data center infrastructure.

Growth in the number of models multiplies with growth in usage per model, compounding data center capacity demand. So while the DOE projected at the end of 2024 that data center load could double or triple by 2028, recent developments may herald a steeper growth curve for capacity demand.
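For a sense of scale, the DOE range can be converted into an implied compound annual growth rate g, where M is the growth multiple and n the number of years. The baseline is our assumption here: we take 2023 levels as the starting point, so the doubling or tripling plays out over n = 5 years.

```latex
\begin{aligned}
g &= M^{1/n} - 1 \\
M = 2,\ n = 5: \quad g &= 2^{1/5} - 1 \approx 0.149 \quad (\approx 14.9\%\ \text{per year}) \\
M = 3,\ n = 5: \quad g &= 3^{1/5} - 1 \approx 0.246 \quad (\approx 24.6\%\ \text{per year})
\end{aligned}
```

A steeper curve means sustained annual growth above these rates, which new capacity would have to keep pace with.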

What this means: the existing project pipeline is designed to meet data processing needs in a 2x to 3x demand scenario, so project development will need to accelerate to match this new reality of faster capacity demand growth. We need to break ground on new projects quickly enough to bring sufficient data center infrastructure online for AI to become the new oil.

To achieve this rapid pace of infrastructure growth, data center development companies need to pursue four objectives.

First, companies need to move faster. As infrastructure development accelerates, a major danger is getting slowed down by managerial tasks and regulatory hoops. Here, logistical and operational inefficiency becomes the primary adversary of AI progress.

Second, companies must minimize costs. The more cost-efficient each new development is, the more infrastructure a given budget can put into the ground.

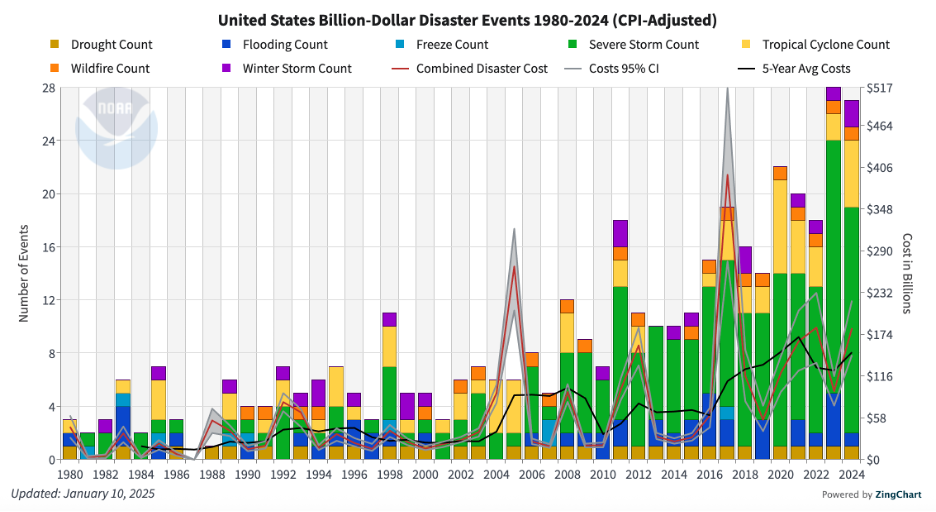

Third, companies must ensure infrastructure resilience from construction through operations. Experts at Lawrence Livermore National Laboratory have warned that extreme weather, such as droughts and extreme heat events in California, can significantly harm data center development and operations. Extreme climate events have only become more common since those warnings, and the LA wildfires have put these risks into public view. These increasingly common disasters also bring new challenges to infrastructure: fires like those in LA can clog air cooling systems with smoke and ash, and hurricanes can halt data center operations entirely. Protecting essential infrastructure from growing climate instability, from development through operations, will become crucial in the coming years.

Last, companies must minimize built environment emissions. Even as we build essential infrastructure for progress, it’s important to note that infrastructure is responsible for 79 percent of all greenhouse gas emissions, according to the UN. Companies with sustainability commitments, like Meta, must find low-emissions ways to build data center infrastructure, a challenge given the urgency of data processing demand.

Startup innovation makes these objectives achievable.

Solutions that can move the needle on all of these fronts will be the winners of this next generation of startups. We’re lucky to have invested in a few of these future winners enabling data center infrastructure growth, including Kaya AI. The team, which recently announced a $5.3MM pre-seed raise, has built a construction AI platform that helps address all of these objectives.

Moving Faster

Velocity of execution starts with smart planning. For data center infrastructure, where site selection, interconnection, and “bring your own power” (BYOP) deployments complicate projects, accurate cost and performance estimation is crucial for planning and deployment. Virta portfolio company ACTUAL is building a unified platform to solve this problem. By unifying the scientific and financial models needed for accurate cost and performance estimation, and by bringing in multiplayer tools for collaboration within teams and with third-party experts, ACTUAL tackles the diverse challenges of large-scale infrastructure. Designed for multi-billion-dollar projects and portfolios, ACTUAL de-risks development and construction of essential infrastructure through dramatically smarter and more collaborative planning, helping teams move faster with fewer roadblocks.

After planning, getting a project into play requires complex siting and permitting processes that slow down execution. Virta portfolio company Shovels addresses this by delivering clean, useful, easy-to-understand building permit data to developers. By streamlining the otherwise time- and labor-intensive process of gathering data from relevant sources, Shovels helps developers move more quickly and break ground on more projects.

For example, recent Shovels data reveals that data center development is concentrated in specific regions, with King County (WA), Santa Clara County (CA), and Multnomah County (OR) leading the nation in data center permits. This geographic clustering demonstrates the strategic importance of location when scaling infrastructure, energy resources, transmission lines, and more to support AI demand.

To help projects already in play move faster, Kaya AI streamlines project management to minimize the time teams spend “churning” on tasks rather than hitting milestones, allowing teams to proactively avoid delays. For instance, Kaya’s autonomous agents work across email, text messages, and phone calls, saving project managers hundreds of hours a month by retrieving and entering information on customers’ behalf instead of requiring them to input it manually. Innovations like these save teams time on labor-intensive tasks, freeing them to execute on other critical initiatives and ultimately speeding up execution and moving projects forward.

Minimizing Costs

In streamlining project development, Kaya AI also helps development companies minimize operational costs: more time spent hitting milestones and less time “churning” on tasks means shorter projects overall, reducing labor and operational costs per build. Kaya AI’s platform thereby dramatically lowers the cost profile of new infrastructure development and allows more projects to be completed on the same development budget.

Maximizing Resilience

During the development phase, Kaya AI tracks project risks from global supply chain disruptions and provides insights that help developers make decisions and keep projects on track for delivery. This helps keep development moving forward even when major climate-related events threaten to disrupt timelines.

A moonshot idea for maximizing climate resilience in the operational phase: removing data center infrastructure from the planet altogether and sending it into space. Star Cloud (YC S24) is working to build data centers in space that are not subject to the climate change-related risks that terrestrial data centers face. More efficient solar energy generation in orbit eliminates reliance on vulnerable grid systems, and free-floating data center modules avoid other environmental risks such as extreme weather events.

Minimizing Emissions

Sustainable sourcing is the first step to minimizing embedded carbon in data center infrastructure. Kaya AI makes it easier for developers to work with suppliers to source building materials, including sustainable materials for data center projects.

When it comes to the hardware plugged into a data center’s data processing infrastructure, developers have limited choices because performance is the top priority. Data center hardware OEMs must therefore make decarbonization a top priority to help the full data center value chain reduce built environment emissions. Virta portfolio company Sluicebox can help OEMs achieve this. With instant, scientifically vetted carbon calculations for hardware design, Sluicebox helps OEMs design low-carbon hardware more easily. Through these design improvements, hardware companies can ultimately help data center developers reduce embedded carbon in their data processing infrastructure.

We’re seeking startups building the innovation layer for sustainable data center development.

We believe the exponential growth of AI development and use presents both a climate risk and an opportunity for new innovation to help developers and operators mitigate that risk and unlock sustainable AI growth. With our investments in companies like Kaya AI, ACTUAL, Shovels, Sluicebox, and Mercury, we continue to seek opportunities to invest in great founders working toward sustainable AI development.

Are you building a solution for sustainable data center development? Reach out here.