AI Data Centers – Opportunities to Mitigate Climate Setbacks

By Virta Ventures on July 30, 2024

We’ve written previously about the power demands of state-of-the-art AI. As a reminder, generating one image via AI requires as much energy as fully charging a smartphone, and training GPT-4 required over 50 gigawatt-hours of energy, 50 times the amount required to train GPT-3.

As AI advances, so does power demand from the data centers that run it. According to Goldman Sachs research, total AI-driven data center power demand will increase 7X between now and 2030. Tech companies see AI infrastructure investment as an arms race: both Sundar Pichai and Mark Zuckerberg have recently said that underinvesting in AI infrastructure poses a far greater risk than overinvesting. To avoid falling behind, companies are prioritizing infrastructure growth and leaving sustainability commitments by the wayside. Google, for example, recently walked back its carbon neutrality commitment after its emissions rose nearly 50% over the last 5 years, largely because of the data center energy demands of its AI efforts.

With these energy and sustainability challenges come opportunities for equity-light innovations to catalyze positive impact. Over the coming weeks, we’ll cover opportunities for software to optimize data center siting and planning, facility construction, and steady-state operations to address AI’s effects on energy demand and the climate. Today, we’re going deep on data center site selection and the cascading energy supply considerations that come with it.

Identifying a Data Center Site

Site identification is a juggling act: developers must balance data processing needs, including speed and security, against energy consumption, costs, and emissions. Software simplifies identifying and weighing the data points involved in site selection.

To understand the factors developers examine when reviewing data center sites, we can draw on resources from companies like Digital Realty, which point to four key areas of interest:

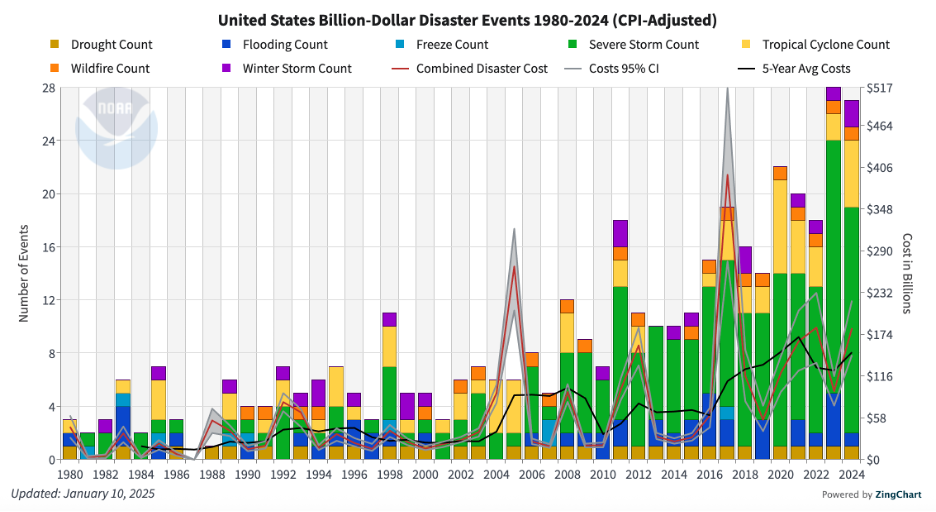

- Location: Location is key to reliable operations, and reliability predictors include the potential for natural disasters and other environmental hazards. Climate risk platforms like Jupiter and Terrafuse AI can help developers quickly assess near-term climate risk at potential sites. As these risks evolve, AI-powered platforms could forecast risk further into a data center’s operational lifecycle.

- Power: Power procurement is a major factor in site selection. Developers look at grid maturity, grid access, energy rates, and the availability of alternative power sources or the potential to build new infrastructure. Companies like Digital Realty that aim to meet sustainability commitments look specifically at the availability of renewable power to manage environmental impact. Platforms like UrbanFootprint that provide data on power availability and grid maturity help developers prioritize sites that can meet their power needs and expedite initial site discovery. Solutions like Othersphere and Actual that model the cost of operating a site based on its power needs and rates add further data points to the selection process.

- Data Infrastructure: For data centers, speed of information flow is crucial. Because latency increases with the distance between server and client device, developers pay close attention to how a site’s location affects latency. For example, data traveling from a data center in Columbus, Ohio to Los Angeles (about 2,200 miles away) takes 4-5 times as long to arrive as data traveling to Cincinnati (about 100 miles away). To find sites that offer both fiber access and proximity to data processing infrastructure, developers can turn to GIS solutions like Esri that map fiber networks alongside parcels available for development.

- Additional Costs: The cost of standing up a data center varies by site and shapes how developers compare candidate sites. Tax rates may also differ between sites, and tax incentives in certain areas can make one site more attractive than another. Digital products like Workyard’s Job Costing and Bridgit Bench, which provide site-by-site views of key construction, labor, and material costs, can help developers accelerate data gathering and decision-making. Platforms like Spark (YC W24) that identify (and later execute on) available incentives can help developers model and capture the benefits of pursuing one site over another.
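One common way software can "weigh" these four areas is a weighted scoring model. The sketch below is illustrative only. The weights, the 0-1 normalization, and the two sites are hypothetical assumptions. They do not describe how Digital Realty or any platform named above actually scores sites.

```python
from dataclasses import dataclass

# Hypothetical weights for the four factor areas; not taken from any real developer.
WEIGHTS = {"location": 0.25, "power": 0.35, "data_infrastructure": 0.25, "cost": 0.15}


@dataclass
class CandidateSite:
    name: str
    # Factor scores normalized to 0-1, where higher is better (e.g., lower climate
    # risk, more available grid capacity, better fiber access, lower all-in cost).
    scores: dict


def weighted_score(site: CandidateSite, weights: dict = WEIGHTS) -> float:
    """Weighted average of a site's normalized factor scores."""
    total_weight = sum(weights.values())
    return sum(w * site.scores[factor] for factor, w in weights.items()) / total_weight


def rank_sites(sites: list, weights: dict = WEIGHTS) -> list:
    """Return sites ordered from highest to lowest weighted score."""
    return sorted(sites, key=lambda s: weighted_score(s, weights), reverse=True)


if __name__ == "__main__":
    sites = [
        CandidateSite("Site A", {"location": 0.8, "power": 0.4,
                                 "data_infrastructure": 0.9, "cost": 0.6}),
        CandidateSite("Site B", {"location": 0.6, "power": 0.9,
                                 "data_infrastructure": 0.5, "cost": 0.7}),
    ]
    for site in rank_sites(sites):
        print(f"{site.name}: {weighted_score(site):.3f}")
```

With these made-up inputs, Site B (0.695) outranks Site A (0.655) because power carries the largest weight. Changing the weights is how a developer would express different priorities.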

While all these factors are important, we see latency as the biggest constraint in site selection. Customers expect minimal latency and maximum performance from AI apps, which motivates AI data center developers to secure locations close to data-processing devices. This narrows the list of candidate sites so dramatically that developers building data centers for AI cannot make other considerations, such as grid energy availability, their top priority.
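The sketch below shows why latency filters sites so quickly, and why the Columbus example gives a 4-5x gap rather than the 22x gap in distance. It models one-way latency as fiber propagation delay plus a fixed, distance-independent overhead for switching, queuing, and processing. Several inputs are assumptions: the 1.47 refractive index, the treatment of the article's mileage as fiber route length, the 4 ms overhead, and the latency budget. Real fiber routes are longer than straight-line distances.

```python
C_VACUUM_KM_PER_S = 299_792.458   # speed of light in a vacuum
FIBER_REFRACTIVE_INDEX = 1.47     # assumption: typical value for silica fiber
KM_PER_MILE = 1.609344


def propagation_ms(route_miles: float) -> float:
    """One-way propagation delay over a fiber route, in milliseconds."""
    fiber_speed_km_per_s = C_VACUUM_KM_PER_S / FIBER_REFRACTIVE_INDEX
    return route_miles * KM_PER_MILE / fiber_speed_km_per_s * 1000


def one_way_latency_ms(route_miles: float, fixed_overhead_ms: float) -> float:
    """Propagation delay plus a distance-independent overhead
    (switching, queuing, server processing). The overhead is a hypothetical input."""
    return propagation_ms(route_miles) + fixed_overhead_ms


def sites_within_budget(route_miles_by_site: dict, budget_ms: float,
                        fixed_overhead_ms: float) -> list:
    """Keep only candidate sites whose estimated one-way latency to the
    users they serve fits within the latency budget."""
    return [site for site, miles in route_miles_by_site.items()
            if one_way_latency_ms(miles, fixed_overhead_ms) <= budget_ms]


if __name__ == "__main__":
    overhead_ms = 4.0  # hypothetical fixed overhead
    la = one_way_latency_ms(2_200, overhead_ms)
    cincinnati = one_way_latency_ms(100, overhead_ms)
    print(f"Columbus -> LA: {la:.1f} ms, Columbus -> Cincinnati: {cincinnati:.1f} ms, "
          f"ratio {la / cincinnati:.1f}x")
```

With zero overhead the ratio equals the 22x distance ratio. A fixed overhead of about 4 ms brings it to roughly 4.5x, in line with the article's 4-5x figure. This shows how fixed costs dominate over short distances while propagation dominates over long ones. A tight budget, such as 10 ms under these assumptions, would keep a 100-mile site and rule out a 2,200-mile one.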

Energy Supply Planning

While some data center developers might want to run entirely on grid power, finding a location with enough grid capacity to meet a data center’s full energy demand is exceptionally difficult. AI data center developers who cannot prioritize energy supply because of latency requirements often end up with sites that lack sufficient grid power. In such cases, they must either enter interconnection queues to connect the data center to the existing power grid or consider on-site power generation.

Interconnection as a data center power supply option has become increasingly time-consuming. According to a report by Lawrence Berkeley National Laboratory, the average project spent 5 years in the queue, vs. 3 years in 2015 and less than 2 years in 2008. Wait times run longer for renewables and may keep growing given the volume of renewable projects: renewables make up 95% of capacity in U.S. interconnection queues.

Gas-fired power plants can be interconnected in 60-70% of the time required for renewables, so there is a growing expectation that gas may, unfortunately, dominate net-new data center power supply. To counter this, digital solutions like Piq Energy and Tapestry, which help automate planning and permitting, can shorten timelines for every clean energy interconnection project in the queue, reducing the backlog and speeding net-new clean energy projects to completion.
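To put rough numbers on the gap, the arithmetic below treats the ~5-year average queue time from the Lawrence Berkeley report as the renewable wait, $T_{\text{ren}}$. That is an assumption, and a conservative one, since renewables wait longer than average:

$$
T_{\text{gas}} \approx (0.6 \text{ to } 0.7)\,T_{\text{ren}} \approx (0.6 \text{ to } 0.7) \times 5\ \text{years} = 3 \text{ to } 3.5\ \text{years}
$$

Under that assumption, a gas plant could come online roughly 1.5 to 2 years sooner than a comparable renewable project. That is the window faster planning and permitting software would need to close.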

On-site power generation is attractive for developers that have the necessary capital and real estate. While large tech companies like Google are turning to solar for on-site clean energy, land constraints may make it difficult for other organizations to replicate Google’s installation of 1.6 million solar panels for data center energy generation.

Even with sufficient land, solar power requires energy storage to keep supply consistent when the sun isn’t shining. Virta portfolio company Tyba unlocks solar deployment in these use cases by simplifying the deployment and operation of storage, using ML predictions to optimize decision-making and accelerate development of the storage infrastructure that on-site solar requires.
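To see why storage is unavoidable for a round-the-clock load, here is a deliberately simplified sizing sketch. It assumes that solar produces a flat output for a fixed number of equivalent sun hours each day and nothing otherwise, and that batteries cover every other hour. It ignores weather, seasons, degradation, and depth-of-discharge limits. The 100 MW load, 6 sun hours, and 90% round-trip efficiency are hypothetical inputs. This is not how Tyba or any other company sizes systems.

```python
HOURS_PER_DAY = 24


def size_solar_and_storage(load_mw: float, sun_hours: float,
                           round_trip_efficiency: float) -> tuple:
    """Rough sizing for serving a constant load around the clock from on-site solar.

    Simplified model (assumption): solar delivers a flat output for `sun_hours`
    each day and nothing otherwise; storage covers every remaining hour.
    Returns (storage energy in MWh, required solar output in MW during sun hours).
    """
    if not 0 < sun_hours < HOURS_PER_DAY:
        raise ValueError("sun_hours must be between 0 and 24")
    if not 0 < round_trip_efficiency <= 1:
        raise ValueError("round_trip_efficiency must be in (0, 1]")
    dark_hours = HOURS_PER_DAY - sun_hours
    # Energy the batteries must discharge while the sun is down.
    storage_mwh = load_mw * dark_hours
    # Daytime solar serves the load directly and recharges the batteries,
    # paying round-trip losses on the stored share.
    daily_solar_mwh = load_mw * sun_hours + storage_mwh / round_trip_efficiency
    solar_output_mw = daily_solar_mwh / sun_hours
    return storage_mwh, solar_output_mw


if __name__ == "__main__":
    storage, solar = size_solar_and_storage(load_mw=100, sun_hours=6,
                                            round_trip_efficiency=0.9)
    print(f"storage: {storage:,.0f} MWh, solar output: {solar:,.0f} MW")
```

Under these assumptions, a flat 100 MW load needs 1,800 MWh of storage and more than four times its own load in daytime solar output. This is why land and storage both become binding constraints for on-site solar.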

For many developers, fuel cells (like the hydrogen fuel cells deployed by Microsoft) will offer higher efficiency and lower operating costs. That said, although fuel cells have a dramatically smaller footprint than solar, land constraints may still apply. Cleantech 1.0 holdover Bloom Energy may be a big winner here, as its fuel cells are compact enough for space-constrained sites (1 MW of power produced within the space of a shipping container).

A parting note: while software platforms can bring data and tooling that motivate developers to pursue on-site clean energy generation over gas and diesel, regulatory change or technology breakthroughs are still needed to avoid a future in which gas dominates. Virta isn’t a regulatory body or a deeptech investor, but we believe in the power of software to speed the clean energy transition and encourage renewable usage by driving adoption and improving hardware efficiency. Ultimately, software and hardware, buoyed by regulatory wins, will together move us toward a sustainable data center future.

Subscribe to our Substack to keep up with future insights in our AI x Climate series.
